A-Frame is a web framework for building 3D/AR/VR experiences using a combination of HTML and JavaScript.

A-Frame is based on three.js and has a large community, as well as lots of community-made custom elements and components.

```html
<html>
  <head>
    <script src="https://aframe.io/releases/1.2.0/aframe.min.js"></script>
  </head>
  <body>
    <a-scene>
      <a-box position="-1 0.5 -3" rotation="0 45 0" color="#4CC3D9"></a-box>
      <a-sphere position="0 1.25 -5" radius="1.25" color="#EF2D5E"></a-sphere>
      <a-cylinder position="1 0.75 -3" radius="0.5" height="1.5" color="#FFC65D"></a-cylinder>
      <a-plane position="0 0 -4" rotation="-90 0 0" width="4" height="4" color="#7BC8A4"></a-plane>
      <a-sky color="#ECECEC"></a-sky>
    </a-scene>
  </body>
</html>
```
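A-Frame scenes are usually extended with components. The sketch below shows the general shape of one, using A-Frame's `AFRAME.registerComponent` and its per-frame `tick(time, timeDelta)` handler. The `spin` component and its `speed` property are hypothetical and exist only for illustration. The definition object is kept separate from the registration call so the rotation math can be exercised without a browser.

```javascript
// Hypothetical "spin" component: rotates its entity around the Y axis.
// Only the AFRAME.registerComponent call needs a browser with A-Frame loaded.
const spinComponent = {
  // Speed in degrees per second, configurable from HTML: spin="speed: 90"
  schema: {
    speed: { type: 'number', default: 45 }
  },
  // Called once per frame; timeDelta is the time since the last frame in milliseconds.
  tick: function (time, timeDelta) {
    const radiansPerSecond = this.data.speed * Math.PI / 180;
    // object3D is the entity's three.js Object3D, whose rotation is in radians.
    this.el.object3D.rotation.y += radiansPerSecond * (timeDelta / 1000);
  }
};

// Register only when A-Frame is present (i.e. in the page, not in a test runner).
if (typeof AFRAME !== 'undefined') {
  AFRAME.registerComponent('spin', spinComponent);
}
```

Components must be registered before the scene that uses them, so load this script after aframe.min.js in the `<head>`. Adding `spin="speed: 90"` to the `<a-box>` above would then turn it a quarter turn per second. Scaling by `timeDelta` keeps the speed the same regardless of the headset's frame rate.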

Babylon.js is an easy-to-use real-time 3D game engine built using TypeScript. It has full WebXR support out of the box, including gaze and teleportation support, experimental AR features, and more. To simplify WebXR development, Babylon.js offers the WebXR Experience Helper, which is the one-stop shop for all XR-related functionality.

To get started, use the Babylon.js playground or try these demos:

- https://playground.babylonjs.com/#PPM311

- https://playground.babylonjs.com/#JA1ND3#164

To start on your own, use this simple template:

```html
<!DOCTYPE html>
<html>
  <head>
    <meta charset="utf-8" />
    <title>Babylon - Getting Started</title>
    <!-- Link to the latest version of Babylon.js -->
    <script src="https://preview.babylonjs.com/babylon.js"></script>
    <style>
      html,
      body {
        overflow: hidden;
        width: 100%;
        height: 100%;
        margin: 0;
        padding: 0;
      }

      #renderCanvas {
        width: 100%;
        height: 100%;
        touch-action: none;
      }
    </style>
  </head>
  <body>
    <canvas id="renderCanvas"></canvas>
    <script>
      window.addEventListener('DOMContentLoaded', async function () {
        // get the canvas DOM element
        var canvas = document.getElementById('renderCanvas');
        // load the 3D engine
        var engine = new BABYLON.Engine(canvas, true);

        // createScene function that creates and returns the scene
        var createScene = async function () {
          // create a basic BJS Scene object
          var scene = new BABYLON.Scene(engine);
          // create a FreeCamera, and set its position to (x:0, y:5, z:-10)
          var camera = new BABYLON.FreeCamera('camera1', new BABYLON.Vector3(0, 5, -10), scene);
          // target the camera to scene origin
          camera.setTarget(BABYLON.Vector3.Zero());
          // attach the camera to the canvas
          camera.attachControl(canvas, false);
          // create a basic light, aiming 0,1,0 - meaning, to the sky
          var light = new BABYLON.HemisphericLight('light1', new BABYLON.Vector3(0, 1, 0), scene);
          // create a built-in "sphere" shape with 16 segments and a diameter of 2
          var sphere = BABYLON.MeshBuilder.CreateSphere('sphere1', { segments: 16, diameter: 2 }, scene);
          // move the sphere upward 1/2 of its height
          sphere.position.y = 1;
          // create a built-in "ground" shape, 6 x 6 units with 2 subdivisions
          var ground = BABYLON.MeshBuilder.CreateGround('ground1', { width: 6, height: 6, subdivisions: 2 }, scene);
          // Add XR support; floorMeshes lists the meshes users can teleport onto
          var xr = await scene.createDefaultXRExperienceAsync({
            floorMeshes: [ground]
            /* other configuration options, as needed */
          });
          // return the created scene
          return scene;
        };

        // call the createScene function
        var scene = await createScene();

        // run the render loop
        engine.runRenderLoop(function () {
          scene.render();
        });

        // the canvas/window resize event handler
        window.addEventListener('resize', function () {
          engine.resize();
        });
      });
    </script>
  </body>
</html>
```

For advanced examples and documentation, see the Babylon.js WebXR documentation page.
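The template calls createDefaultXRExperienceAsync unconditionally. If you would rather decide yourself whether to show XR-related UI, you can ask the browser directly: the WebXR Device API that Babylon.js builds on exposes `navigator.xr.isSessionSupported(mode)`. The sketch below checks both immersive modes and treats either a missing `navigator.xr` or a rejected check as "not supported". The helper names `isModeSupported` and `detectXRModes` are hypothetical. `navigator.xr` is passed in as a parameter so the logic can also run outside a browser.

```javascript
// Resolves to true/false for one WebXR session mode.
// `xrSystem` is navigator.xr in a browser; it is undefined without WebXR or on insecure origins.
async function isModeSupported(xrSystem, mode) {
  if (!xrSystem || typeof xrSystem.isSessionSupported !== 'function') return false;
  try {
    return await xrSystem.isSessionSupported(mode);
  } catch (err) {
    // The check can reject (e.g. for a mode the browser does not know); treat that as unsupported.
    return false;
  }
}

// Checks both immersive modes in parallel.
async function detectXRModes(xrSystem) {
  const [vr, ar] = await Promise.all([
    isModeSupported(xrSystem, 'immersive-vr'),
    isModeSupported(xrSystem, 'immersive-ar')
  ]);
  return { vr, ar };
}
```

For example, calling `const { vr, ar } = await detectXRModes(navigator.xr);` inside createScene would let you skip creating the XR helper when neither mode is available.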

Model viewer is a custom HTML element for displaying 3D models and viewing them in AR.

AR

```html
<!-- Import the component -->
<script type="module" src="https://unpkg.com/@google/model-viewer/dist/model-viewer.js"></script>
<script nomodule src="https://unpkg.com/@google/model-viewer/dist/model-viewer-legacy.js"></script>

<!-- Use it like any other HTML element -->
<model-viewer src="examples/assets/Astronaut.glb" ar alt="A 3D model of an astronaut" auto-rotate camera-controls style="background-color: #455A64;"></model-viewer>
```
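Because `<model-viewer>` behaves like any other HTML element, you can also create it from script, for example when the model to show is chosen at runtime. The following is a minimal sketch that assumes the component script above is already loaded. `createModelViewer` is a hypothetical helper. The document is passed in as a parameter so the function can be tried outside a browser.

```javascript
// Builds a <model-viewer> element with the same attributes as the markup example.
function createModelViewer(doc, { src, alt, ar = true }) {
  const viewer = doc.createElement('model-viewer');
  viewer.setAttribute('src', src);
  // alt text describes the model for screen readers
  viewer.setAttribute('alt', alt);
  // Boolean attributes are switched on by their presence, so an empty value is enough
  viewer.setAttribute('camera-controls', '');
  viewer.setAttribute('auto-rotate', '');
  if (ar) viewer.setAttribute('ar', '');
  return viewer;
}
```

In a page, `document.body.appendChild(createModelViewer(document, { src: 'examples/assets/Astronaut.glb', alt: 'A 3D model of an astronaut' }))` would add the same viewer as the markup above, minus the background color.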

Needle Engine is a web engine for complex and simple 3D applications alike. It is flexible, extensible and has built-in support for collaboration and XR! It is built around the glTF standard for 3D assets.

Powerful integrations for Unity and Blender allow artists and developers to collaborate and manage web applications inside battle-tested 3D editors. Needle Engine integrations let you use editor features to export models, author materials, animate and sequence animations, bake lightmaps, and more with ease.

Our powerful and easy-to-use compression and optimization pipeline for the web makes sure your files are ready, small, and fast to load!

Follow the Getting Started Guide to download and install Needle Engine. You can also find a list of sample projects that you can try live in the browser and download to give your project a head start.

For writing custom components read the Scripting Guide.

p5.xr is an add-on for p5.js, a JavaScript library that makes coding accessible for artists, designers, educators, and beginners. p5.xr adds the ability to run p5 sketches in Augmented Reality or Virtual Reality.

p5.xr also works in the p5.js online editor: simply add a script tag pointing to the latest p5.xr release to the index.html file.

```html
<!DOCTYPE html>
<html>
  <head>
    <script src="https://cdn.jsdelivr.net/npm/p5@1/lib/p5.js"></script>
    <script src="https://github.com/stalgiag/p5.xr/releases/download/0.3.2-rc.3/p5xr.min.js"></script>
  </head>
  <body>
    <script>
      function preload() {
        // p5.xr: create a WebXR-enabled canvas for VR
        createVRCanvas();
      }

      function setup() {
        setVRBackgroundColor(0, 0, 255);
        angleMode(DEGREES);
      }

      function draw() {
        // tilt the plane by 90 degrees so it lies flat like a floor
        rotateX(-90);
        fill(0, 255, 0);
        noStroke();
        plane(10, 10);
      }
    </script>
  </body>
</html>
```

PlayCanvas is an open-source game engine. It uses HTML5 and WebGL to run games and other interactive 3D content in any mobile or desktop browser.

Full documentation, including an API reference, is available on the PlayCanvas Developer site. Also check out the XR tutorials (with sources) built using the online Editor, as well as engine-only examples and their source code.

Below is a basic example of setting up a PlayCanvas application with a simple scene containing a light and some cubes arranged in a grid. If WebXR is supported, clicking or tapping starts an immersive VR session:

```html
<!DOCTYPE html>
<html lang="en">
  <head>
    <title>PlayCanvas Basic VR</title>
    <meta charset="utf-8">
    <script src="https://unpkg.com/playcanvas"></script>
    <style type="text/css">
      body {
        margin: 0;
        overflow: hidden;
      }

      canvas {
        width: 100%;
        height: 100%;
      }
    </style>
  </head>
  <body>
    <canvas id="canvas"></canvas>
    <script>
      let canvas = document.getElementById('canvas');

      // create application
      let app = new pc.Application(canvas, {
        mouse: new pc.Mouse(canvas),
        touch: new pc.TouchDevice(canvas)
      });

      // set resizing rules
      app.setCanvasFillMode(pc.FILLMODE_FILL_WINDOW);
      app.setCanvasResolution(pc.RESOLUTION_AUTO);

      // handle window resize (in FILL_WINDOW mode the size is taken from the window)
      window.addEventListener("resize", function () {
        app.resizeCanvas();
      });

      // use device pixel ratio
      app.graphicsDevice.maxPixelRatio = window.devicePixelRatio;

      // start an application
      app.start();

      // create camera
      let cameraEntity = new pc.Entity();
      cameraEntity.addComponent("camera", {
        clearColor: new pc.Color(0.3, 0.3, 0.3)
      });
      app.root.addChild(cameraEntity);

      // create light
      let light = new pc.Entity();
      light.addComponent("light", {
        type: "spot",
        range: 30
      });
      light.translate(0, 10, 0);
      app.root.addChild(light);

      let SIZE = 8;

      // create floor plane
      let plane = new pc.Entity();
      plane.addComponent("render", {
        type: "plane"
      });
      plane.setLocalScale(SIZE * 2, 1, SIZE * 2);
      app.root.addChild(plane);

      // create a grid of cubes
      for (let x = 0; x < SIZE; x++) {
        for (let z = 0; z < SIZE; z++) {
          let cube = new pc.Entity();
          cube.addComponent("render", {
            type: "box"
          });
          cube.setPosition(2 * x - SIZE + 1, 0.5, 2 * z - SIZE + 1);
          app.root.addChild(cube);
        }
      }

      // if XR is supported
      if (app.xr.supported) {
        // handle mouse / touch events
        let onTap = function (evt) {
          // if immersive VR supported
          if (app.xr.isAvailable(pc.XRTYPE_VR)) {
            // start immersive VR session
            cameraEntity.camera.startXr(pc.XRTYPE_VR, pc.XRSPACE_LOCALFLOOR);
          }
          evt.event.preventDefault();
          evt.event.stopPropagation();
        };

        // attach mouse / touch events
        app.mouse.on("mousedown", onTap);
        app.touch.on("touchend", onTap);
      }
    </script>
  </body>
</html>
```
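The cube positions in the example follow the formula `2 * x - SIZE + 1`. The function below reproduces it outside PlayCanvas to show why the grid is centred on the origin. `gridPositions` is a hypothetical name. For SIZE = 8 the coordinates run -7, -5, ..., 7. The 1-unit cubes therefore sit 1 unit apart and cover -7.5 to 7.5, which fits inside the floor plane scaled to 16 × 16 (assuming the default 1 × 1 plane primitive). A y value of 0.5 lifts each unit cube so it rests on the floor at y = 0.

```javascript
// Returns the [x, y, z] position of every cube in a size-by-size grid,
// using the same formula as the PlayCanvas example.
function gridPositions(size) {
  const positions = [];
  for (let x = 0; x < size; x++) {
    for (let z = 0; z < size; z++) {
      // Spacing of 2 units; subtracting (size - 1) shifts the grid so it is centred on 0
      positions.push([2 * x - size + 1, 0.5, 2 * z - size + 1]);
    }
  }
  return positions;
}
```

Because the grid is symmetric around the origin, the camera, which starts at the origin, stands in the gap between the four central cubes when the VR session begins.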

react-xr is a collection of hooks to help you build XR experiences in react-three-fiber applications.

To make a VR React application we’ll use the following stack:

- Three.js: a library for 3D graphics
- react-three-fiber (r3f): a React renderer for Three.js
- drei: a collection of reusable components for r3f
- react-xr: a collection of hooks to help you build XR experiences in react-three-fiber applications

react-xr

As soon as you have a 3D scene using react-three-fiber you can make it available in VR or AR with react-xr.

To do that, all you need to do is replace the `<Canvas>` component with `<VRCanvas>` or `<ARCanvas>` from the react-xr package. It’s still the same canvas component, but with all the additional wiring necessary for VR to function.

Take a look at these simple examples:

VR

https://codesandbox.io/s/react-xr-simple-demo-8i9ro

AR

https://codesandbox.io/s/react-xr-simple-ar-demo-8w8hm

You’ll notice that you now have an “Enter VR/AR” button at the bottom of the screen that starts the experience.

Adding controllers

To add controllers, you can use a component from the react-xr package called `<DefaultXRControllers/>`. It loads the appropriate controller models and puts them in the scene.

```jsx
<VRCanvas>
  {/* or ARCanvas */}
  <DefaultXRControllers />
</VRCanvas>
```

Interactivity

To interact with objects using controllers, you can use the `<Interactive>` component or the `useInteraction` hook. They let you attach event handlers to your objects. All interactions are based on rays cast from the controllers.

Here is a short example:

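```jsx
import { useState } from 'react'
import { Interactive } from '@react-three/xr'
import { Box } from '@react-three/drei'

function HoverableBox() {
  const [isHovered, setIsHovered] = useState(false)

  return (
    <Interactive onSelect={() => console.log('clicked!')} onHover={() => setIsHovered(true)} onBlur={() => setIsHovered(false)}>
      <Box>
        {/* highlight the box while a controller ray points at it */}
        <meshStandardMaterial color={isHovered ? 'hotpink' : 'gray'} />
      </Box>
    </Interactive>
  )
}
```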

You can also see this approach in both the VR and AR examples above.

Learn more

We have barely scratched the surface of what is possible with libraries like react-three-fiber and react-xr. I encourage you to check out more examples in the GitHub repositories of both projects. Remember, every r3f scene can easily be adapted to work in WebXR.

Three.js is a cross-browser JavaScript library used to create and display animated 3D computer graphics in a web browser. It has a large community, good docs, and many examples.

Using VR is largely the same as in regular Three.js applications: set up the scene, camera, and renderer. The major difference is setting the renderer.xr.enabled flag to true. There is an optional VRButton class that creates a button to enter and exit VR for you.

For more info, see this guide to VR in Three.js and the WebXR examples.

Here is a full example that sets up a scene with a rotating red cube.

```html
<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="UTF-8">
    <title>Title</title>
    <style type="text/css">
      body {
        margin: 0;
        background-color: #000;
      }

      canvas {
        display: block;
      }
    </style>
  </head>
  <body>
    <script type="module">
      // Import three
      import * as THREE from 'https://unpkg.com/three/build/three.module.js';
      // Import the default VRButton
      import { VRButton } from 'https://unpkg.com/three/examples/jsm/webxr/VRButton.js';

      // Make a new scene
      let scene = new THREE.Scene();
      // Set background color of the scene to gray
      scene.background = new THREE.Color(0x505050);

      // Make a camera. Note that far is set to 100, which is better for real-world sized environments
      let camera = new THREE.PerspectiveCamera(50, window.innerWidth / window.innerHeight, 0.1, 100);
      camera.position.set(0, 1.6, 3);
      scene.add(camera);

      // Add some lights
      let light = new THREE.DirectionalLight(0xffffff, 0.5);
      light.position.set(1, 1, 1).normalize();
      scene.add(light);
      scene.add(new THREE.AmbientLight(0xffffff, 0.5));

      // Make a red cube
      let cube = new THREE.Mesh(
        new THREE.BoxGeometry(1, 1, 1),
        new THREE.MeshLambertMaterial({ color: 'red' })
      );
      cube.position.set(0, 1.5, -10);
      scene.add(cube);

      // Make a renderer that fills the screen
      let renderer = new THREE.WebGLRenderer({ antialias: true });
      renderer.setPixelRatio(window.devicePixelRatio);
      renderer.setSize(window.innerWidth, window.innerHeight);
      // Turn on VR support
      renderer.xr.enabled = true;
      // Set animation loop
      renderer.setAnimationLoop(render);
      // Add canvas to the page
      document.body.appendChild(renderer.domElement);

      // Add a button to enter/exit VR to the page
      document.body.appendChild(VRButton.createButton(renderer));

      // For AR instead, import ARButton at the top
      // import { ARButton } from 'https://unpkg.com/three/examples/jsm/webxr/ARButton.js';
      // then create the button
      // document.body.appendChild(ARButton.createButton(renderer));

      // Handle browser resize
      window.addEventListener('resize', onWindowResize, false);

      function onWindowResize() {
        camera.aspect = window.innerWidth / window.innerHeight;
        camera.updateProjectionMatrix();
        renderer.setSize(window.innerWidth, window.innerHeight);
      }

      function render(time) {
        // Rotate the cube (time is in milliseconds, so this is one radian per second)
        cube.rotation.y = time / 1000;
        // Draw everything
        renderer.render(scene, camera);
      }
    </script>
  </body>
</html>
```
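In the render function above, `time` is the timestamp in milliseconds that three.js passes to the setAnimationLoop callback. (setAnimationLoop is used instead of window.requestAnimationFrame because frames are driven by the XR session while it is running.) So `time / 1000` rotates the cube one radian per second, a full turn roughly every 6.28 seconds. If you prefer to state the speed as a turn period, a small helper can do the conversion. `rotationAt` is a hypothetical name:

```javascript
// Angle in radians for an object that completes one full turn every `secondsPerTurn` seconds.
// `timeMs` is the millisecond timestamp passed to the setAnimationLoop callback.
function rotationAt(timeMs, secondsPerTurn) {
  const turns = timeMs / 1000 / secondsPerTurn;
  // Keep only the fractional part of a turn so the angle stays in [0, 2π)
  return (turns % 1) * 2 * Math.PI;
}
```

Writing `cube.rotation.y = rotationAt(time, 4);` in render() would make the cube turn once every 4 seconds. `rotationAt(time, 2 * Math.PI)` matches the original speed.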

Here is a full example of an immersive-ar demo made using three.js:

```html
<!DOCTYPE html>
<html lang="en">
  <head>
    <title>three.js ar - cones</title>
    <meta charset="utf-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0, user-scalable=no">
    <link type="text/css" rel="stylesheet" href="main.css">
  </head>
  <body>
    <div id="info">
      <a href="https://threejs.org" target="_blank" rel="noopener">three.js</a> ar - cones<br/>
    </div>
    <script type="module">
      import * as THREE from 'https://unpkg.com/three/build/three.module.js';
      import { ARButton } from 'https://unpkg.com/three/examples/jsm/webxr/ARButton.js';

      var container;
      var camera, scene, renderer;
      var controller;

      init();
      animate();

      function init() {
        container = document.createElement('div');
        document.body.appendChild(container);

        scene = new THREE.Scene();
        camera = new THREE.PerspectiveCamera(70, window.innerWidth / window.innerHeight, 0.01, 20);

        var light = new THREE.HemisphereLight(0xffffff, 0xbbbbff, 1);
        light.position.set(0.5, 1, 0.25);
        scene.add(light);

        renderer = new THREE.WebGLRenderer({ antialias: true, alpha: true });
        renderer.setPixelRatio(window.devicePixelRatio);
        renderer.setSize(window.innerWidth, window.innerHeight);
        renderer.xr.enabled = true;
        container.appendChild(renderer.domElement);

        // Button that starts and ends the immersive-ar session
        document.body.appendChild(ARButton.createButton(renderer));

        // Cone-shaped geometry (a cylinder with a top radius of 0), shared by all cones
        var geometry = new THREE.CylinderGeometry(0, 0.05, 0.2, 32).rotateX(Math.PI / 2);

        // On each select (e.g. a screen tap), place a randomly colored cone 0.3 m in front of the controller
        function onSelect() {
          var material = new THREE.MeshPhongMaterial({ color: 0xffffff * Math.random() });
          var mesh = new THREE.Mesh(geometry, material);
          mesh.position.set(0, 0, -0.3).applyMatrix4(controller.matrixWorld);
          mesh.quaternion.setFromRotationMatrix(controller.matrixWorld);
          scene.add(mesh);
        }

        controller = renderer.xr.getController(0);
        controller.addEventListener('select', onSelect);
        scene.add(controller);

        window.addEventListener('resize', onWindowResize, false);
      }

      function onWindowResize() {
        camera.aspect = window.innerWidth / window.innerHeight;
        camera.updateProjectionMatrix();
        renderer.setSize(window.innerWidth, window.innerHeight);
      }

      function animate() {
        renderer.setAnimationLoop(render);
      }

      function render() {
        renderer.render(scene, camera);
      }
    </script>
  </body>
</html>
```
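In onSelect(), `mesh.position.set(0, 0, -0.3).applyMatrix4(controller.matrixWorld)` takes a point 0.3 m in front of the controller along its local -Z axis and moves it into world space (WebXR units are meters). The plain-JavaScript function below mirrors that calculation to show what applyMatrix4 does. It is an illustration, not a replacement for three.js. The matrix is a flat array of 16 numbers in column-major order, which is the layout three.js uses for Matrix4.elements.

```javascript
// Transforms a local-space point [x, y, z] by a 4x4 column-major matrix `m`,
// following the same arithmetic as three.js Vector3.applyMatrix4.
function applyMatrix4(point, m) {
  const [x, y, z] = point;
  // Homogeneous divide; w is 1 for the rigid (rotation + translation) pose of a controller
  const w = 1 / (m[3] * x + m[7] * y + m[11] * z + m[15]);
  return [
    (m[0] * x + m[4] * y + m[8] * z + m[12]) * w,
    (m[1] * x + m[5] * y + m[9] * z + m[13]) * w,
    (m[2] * x + m[6] * y + m[10] * z + m[14]) * w
  ];
}
```

Consider a controller at (1, 1.5, 0) that is turned 90 degrees to the left around the Y axis. The same local offset lands at (0.7, 1.5, 0), which is 0.3 m along the world -X axis from the controller.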

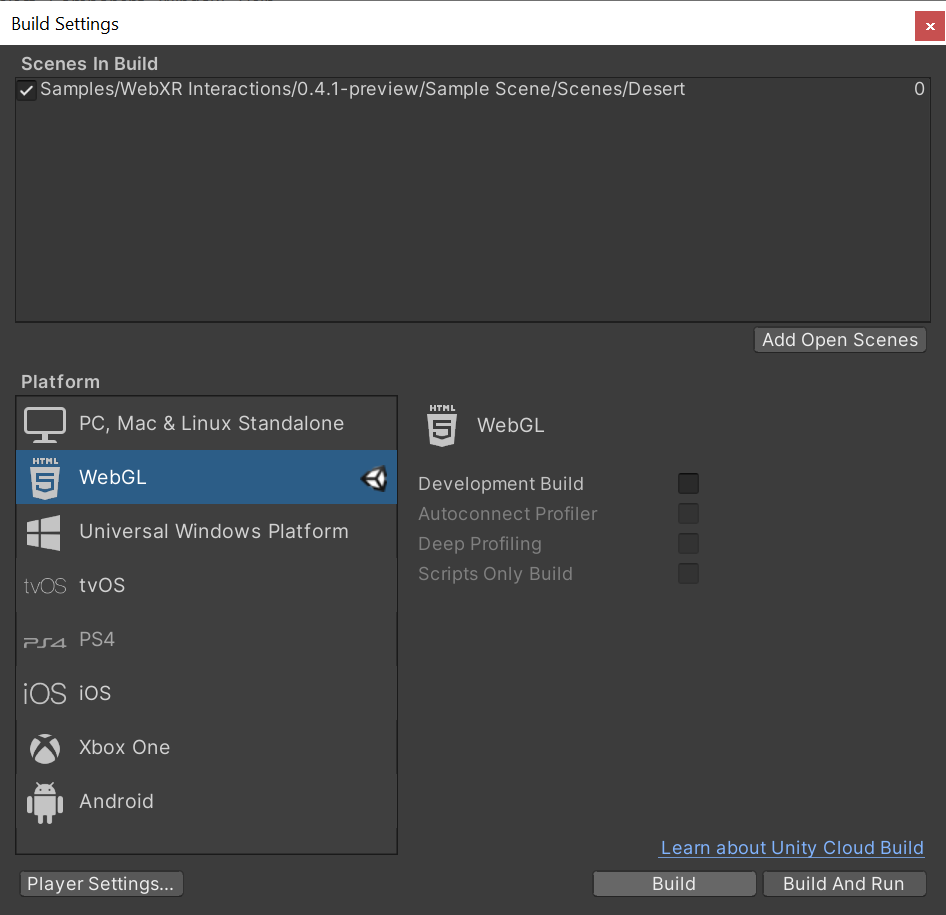

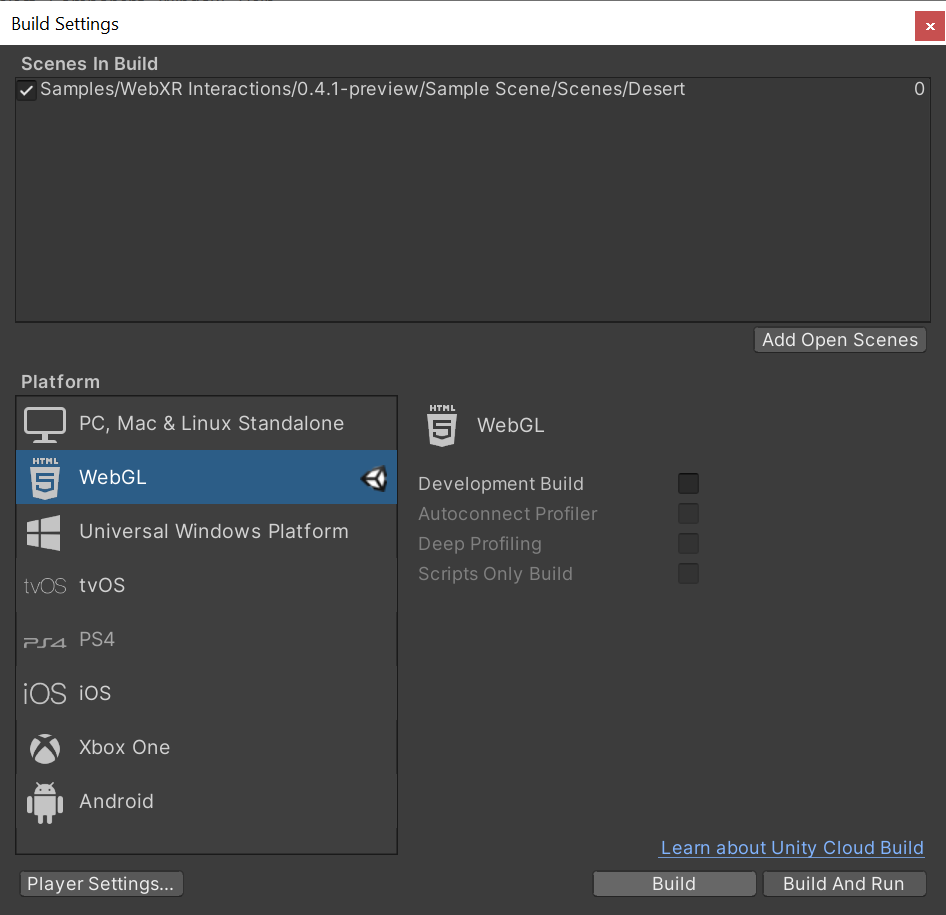

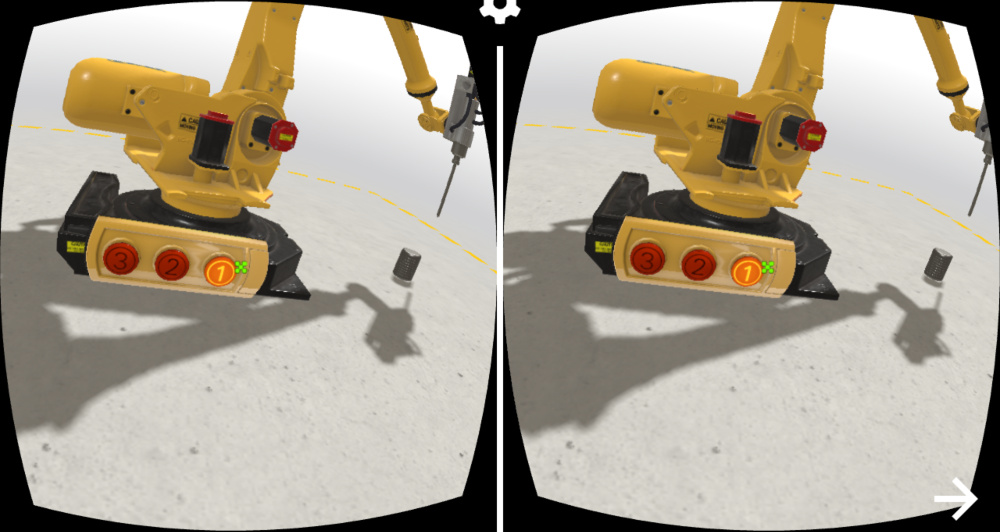

Unity is a GUI-based game engine. It has a number of unofficial WebXR extensions.

Create a new Unity Project (2019.4.7f1 and up in the 2019.4.x cycle).

Switch platform to WebGL.

Import WebXR Export and WebXR Interactions packages from OpenUPM.

Once the packages are imported, go to Window > WebXR > Copy WebGLTemplates.

After the WebGLTemplates are in the Assets folder, open the XR Plug-in Management tab in the Project Settings window and select the WebXR Export plug-in provider.

Now you can import the Sample Scene from Window > Package Manager > WebXR Interactions > Import into Project.

In Project Settings > Player > Resolution and Presentation, select WebXR as the WebGL Template.

Now you can build the project.

Make sure to build it from Build Settings > Build. Unity’s Build And Run server uses HTTP, but WebXR requires a secure context, so run the build on your own HTTPS server.

That’s it.
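Browsers only allow WebXR in a secure context, and plain HTTP counts as secure only for localhost. A headset or phone on your network therefore cannot use the Build And Run server. One way to run "your own HTTPS server" is this minimal sketch, which uses only Node's built-in modules. It assumes a recent Node.js version and a certificate and key that your device trusts. The file names key.pem and cert.pem are placeholders; a tool such as mkcert can create them. `startServer` and `headersFor` are hypothetical helper names.

```javascript
// Minimal HTTPS static file server for a Unity WebGL build (sketch).
const https = require('node:https');
const fs = require('node:fs');
const path = require('node:path');

const MIME_TYPES = {
  '.html': 'text/html; charset=utf-8',
  '.js': 'text/javascript',
  '.wasm': 'application/wasm',
  '.data': 'application/octet-stream',
  '.json': 'application/json',
  '.css': 'text/css',
  '.png': 'image/png',
  '.ico': 'image/x-icon'
};

// Unity can compress build files as Gzip (.gz) or Brotli (.br). The browser only
// decompresses them itself if the server sends a matching Content-Encoding header
// together with the Content-Type of the original, uncompressed file.
function headersFor(filePath) {
  const headers = {};
  let ext = path.extname(filePath);
  if (ext === '.gz' || ext === '.br') {
    headers['Content-Encoding'] = ext === '.gz' ? 'gzip' : 'br';
    ext = path.extname(filePath.slice(0, -ext.length));
  }
  headers['Content-Type'] = MIME_TYPES[ext] || 'application/octet-stream';
  return headers;
}

// Serves the files in `root` (the folder Unity built into) over HTTPS.
function startServer({ root, port = 8443, keyFile = 'key.pem', certFile = 'cert.pem' }) {
  const rootDir = path.resolve(root);
  const tlsOptions = { key: fs.readFileSync(keyFile), cert: fs.readFileSync(certFile) };

  const server = https.createServer(tlsOptions, (req, res) => {
    let urlPath;
    try {
      urlPath = decodeURIComponent(new URL(req.url, 'https://localhost').pathname);
    } catch (err) {
      res.writeHead(400);
      res.end('Bad request');
      return;
    }
    if (urlPath.endsWith('/')) urlPath += 'index.html';

    // Refuse any path that resolves outside the build folder
    const filePath = path.resolve(rootDir, '.' + urlPath);
    if (!filePath.startsWith(rootDir + path.sep)) {
      res.writeHead(403);
      res.end('Forbidden');
      return;
    }

    fs.readFile(filePath, (err, body) => {
      if (err) {
        res.writeHead(404);
        res.end('Not found');
        return;
      }
      res.writeHead(200, headersFor(filePath));
      res.end(body);
    });
  });

  server.listen(port, () => console.log(`Serving ${rootDir} at https://localhost:${port}/`));
  return server;
}
```

For example, call `startServer({ root: 'path/to/build' })` and then open https://<your computer's address>:8443/ on the device. Unity's Decompression Fallback option in the Publishing Settings is an alternative if you cannot control the headers your server sends.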

Verge3D is an artist-friendly toolkit that allows Blender, 3ds Max, or Maya artists to create immersive web-based experiences. Verge3D can be used to build interactive animations, product configurators, engaging presentations of any kind, online stores, explainers, e-learning content, portfolios, and browser games.

Setting up Virtual Reality

We recommend enabling the Legacy VR option in the app creation settings in the App Manager in order to support a wider range of browsers (such as Mozilla Firefox) and devices.

Cardboard devices should work out of the box in any mobile browser, both on Android and iOS.

Google Daydream works in stable Chrome browser on Android phones while HTC and Oculus devices should work in both Chrome and Firefox browsers.

Please note that WebXR requires a secure context: Verge3D apps must be served over HTTPS/SSL or from a localhost URL.
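The browser decides whether a page is a secure context, and inside a page `window.isSecureContext` reports the result directly. To check ahead of time whether a URL you plan to share qualifies, you can use the hypothetical helper below. It approximates the rules in simplified form: HTTPS (or WSS) URLs qualify, and so do loopback hosts such as localhost or 127.0.0.1, even over plain HTTP. It does not cover every case a browser considers, such as file: URLs or browser flags, so treat it as a rough guide only.

```javascript
// Simplified approximation of the browser's "potentially trustworthy origin" check.
// In a running page, prefer window.isSecureContext, which applies the full rules.
function isLikelySecureOrigin(urlString) {
  const url = new URL(urlString);
  // Encrypted schemes always qualify
  if (url.protocol === 'https:' || url.protocol === 'wss:') return true;
  const host = url.hostname;
  // Loopback hosts are trusted even over plain HTTP
  return (
    host === 'localhost' ||
    host.endsWith('.localhost') ||
    host === '[::1]' ||
    /^127(\.\d{1,3}){3}$/.test(host)
  );
}
```

This is why a Verge3D app opened from a LAN address such as http://192.168.0.10 will not offer VR, while the same app opened over https:// or from localhost will.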

The VR mode can be set up for any Verge3D app using the enter VR mode puzzle.

Interaction with 3D objects is performed using a gaze-based reticle pointer, which is provided automatically for VR devices without controllers (such as Cardboard viewers).

For VR devices with controllers, interaction is performed using a virtual ray cast from the controllers.

You can use the standard when hovered or when clicked puzzles to capture user events, as well as the VR-specific on session event puzzle.

Setting up Augmented Reality

You can run your Verge3D-based augmented reality applications on mobile devices with Android or iOS/iPadOS operating systems.

Android

To enable augmented reality, you need an Android device that supports ARCore technology and the latest Google Chrome browser. You also need to install Google Play Services for AR. If it is not pre-installed, you are prompted to install it automatically the first time you enter AR mode.

iOS/iPadOS

Mozilla’s WebXR Viewer is a Firefox-based browser application which supports the AR technology on Apple devices (starting from iPhone 6s). Simply install it from the App Store.

Creating AR Apps

The AR mode can be set up for any Verge3D app using the enter AR mode puzzle.

Upon entering AR mode, you will be able to position your 3D content in the “real” coordinate system, which is aligned with your mobile device. In addition, you can detect horizontal surfaces (tables, shelves, floors, etc.) using the detect horizontal surface AR puzzle.

Also, to see the real environment through your 3D canvas, you should enable the transparent background option in the configure application puzzle.

What’s Next

Check out the User Manual for more info on creating AR/VR applications with Verge3D or see the tutorials for beginners on YouTube.

Got Questions?

Feel free to ask on the forums!

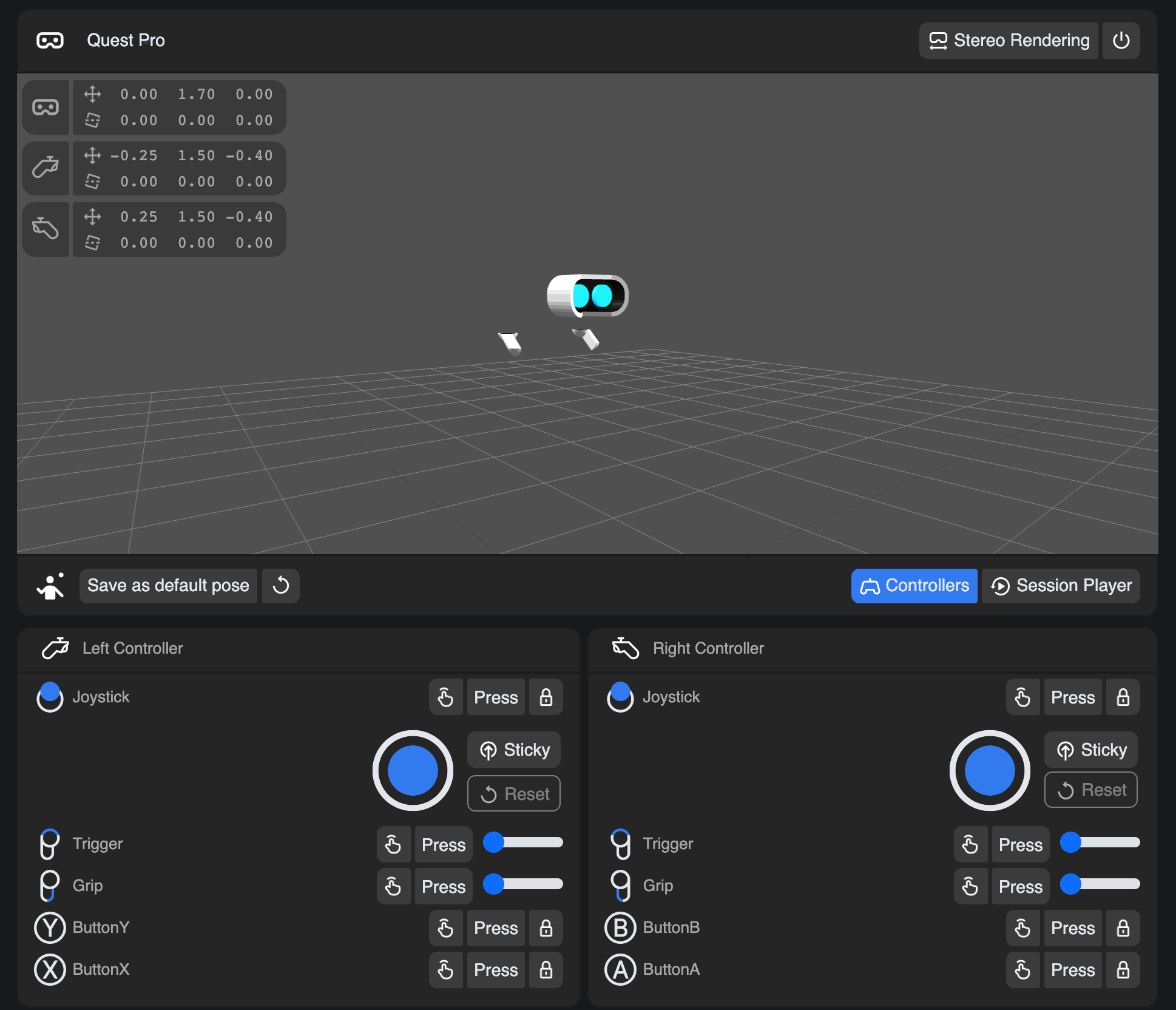

Wonderland Engine is a highly performant WebXR focused development platform.

The Wonderland Editor (Windows, macOS, Linux) makes WebXR development accessible and provides a very efficient workflow, e.g. by reloading the browser for you whenever your files change.

WebAssembly and optimizations like automatic batching of your scene allow you to draw many objects without having to worry about performance.

Start with the Quick Start Guide and find a list of examples to help you get started.

To start writing custom code, check out the JavaScript Getting Started Guide and refer to the JavaScript API Documentation.
